Strong interests in cyberspace produce large amounts of highly sophisticated malicious software.

CYBERSPACE INHABITANTS

Entering cyberspace means probably becoming the target of thieves, hackers, activists, terrorists, nation-state cyber warriors and foreign intelligence services. In this scenario, strong competition in cybercrime and cyberwarfare continuously drives both the proliferation of malicious programs and their level of sophistication.

MALWARE PROLIFERATION

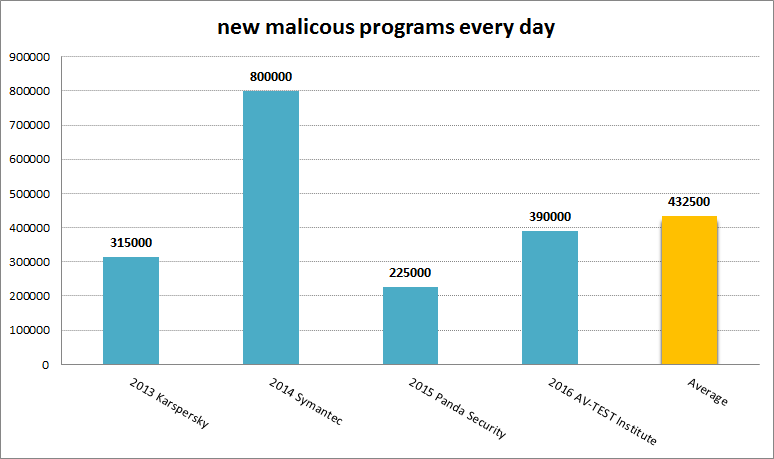

According to data published by the major antivirus companies, an average of 400,000 new malware samples appear every day.

This figure could be somewhat inflated by the antivirus companies, but even if we accept only 2% of the 400,000 as genuine, that still means 8,000 new strains of computer malware per day in the wild.
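The estimate above is simple arithmetic, and a short sketch makes it easy to try other assumptions. The function name and the yearly projection are illustrative additions, not figures from the antivirus vendors.

```python
# Conservative estimate of new malware strains per day, using the figures from the text.
REPORTED_SAMPLES_PER_DAY = 400_000  # average reported by antivirus companies
TRUSTED_FRACTION = 0.02             # assumption: only 2% of the reports are genuine


def conservative_daily_strains(reported: int, fraction: float) -> int:
    """Return the number of samples left after discounting the reported figure."""
    return round(reported * fraction)


daily = conservative_daily_strains(REPORTED_SAMPLES_PER_DAY, TRUSTED_FRACTION)
print(daily)        # 8000 strains per day
print(daily * 365)  # 2920000 strains per year at the same rate
```

Changing `TRUSTED_FRACTION` shows how sensitive the conclusion is to the discount applied to the vendors' numbers.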

Today it is impossible to live without digital technology, which is the foundation of a digital society where governments, institutions, industries and individuals operate and interact in everyday life.

So, to face high-profile data breaches and the ever-increasing cyber threats coming from that same digital world, huge investments in information security are made around the world (according to Gartner, spending in 2015 was above $75.4 billion).

But security seems an illusion in light of the results of research conducted at Imperva, a data security research firm in California.

A group of researchers infected a computer with 82 new malware samples and ran 40 threat-detection engines from the most important antivirus companies against them.

The result was that only 5 percent of the malware samples were detected. This means that even though antivirus software is almost useless against new malware, it is still necessary to protect us from the already known threats and so raise the overall level of security and protection.

EVERYONE COULD BE A TARGET

In the leak involving Twitter on June 8th, 2016, user accounts were compromised, but not through Twitter's servers. This means that 32,888,300 users were hacked individually by a Russian hacker. This is striking and underlines how easy it is to guess users' passwords and to infect users' computers in order to steal their credentials.

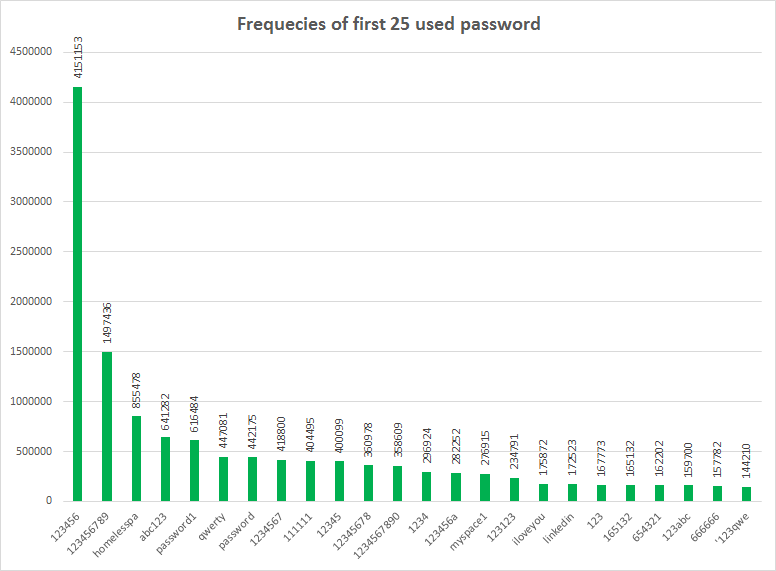

The password frequencies in the chart show how little attention users pay to the passwords they choose. The chart considers only the 25 most used passwords. The statistic is computed on 20,210,641 user accounts released in several leaks [04].
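A frequency ranking like the one in the chart can be computed with a simple counter. The following is a minimal sketch, assuming the passwords are already available as a list of strings. The `top_passwords` function and the tiny `sample` list are hypothetical and are not the data behind the chart.

```python
from collections import Counter


def top_passwords(passwords, n=25):
    """Return (password, count, share of all accounts) for the n most used passwords."""
    counts = Counter(passwords)
    total = sum(counts.values())
    return [(pw, c, c / total) for pw, c in counts.most_common(n)]


# Made-up sample for illustration only.
sample = ["123456", "password", "123456", "qwerty", "123456", "password"]

for pw, count, share in top_passwords(sample, n=3):
    print(f"{pw:10s} {count:3d} {share:.1%}")
```

The share column is the useful part for a defender: it estimates what fraction of accounts an attacker could open by trying only the few most common passwords.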

Users probably think: why should I be hacked? I'm just an ordinary guy, who cares about me? But what matters to a bad guy is making a profit. So, a huge quantity of accounts to sell on the dark market is a good reason to steal every Twitter user's credentials. In fact, the quantity is the key factor that attracts buyers.

Even if chameleon attacks or werewolf attacks can easily bypass antivirus defenses, it is important to pay more attention to our access keys to prevent the leak of such a huge quantity of user accounts because, I think, most of the Twitter user accounts were simply guessed by the bad guy.

MALICIOUS SOFTWARE ANALYSIS

Malicious Software is characterized by four components:

- propagation methods,

- exploits,

- payloads,

- level of sophistication.
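The four components listed above can be kept together in a small record while a sample is being analyzed. This is only a sketch of one possible note-taking structure. The class name, field names, and example values are hypothetical and do not come from any particular analysis tool.

```python
from dataclasses import dataclass, field


@dataclass
class MalwareProfile:
    """Analyst notes for one sample, organized by the four components."""
    name: str
    propagation_methods: list[str] = field(default_factory=list)
    exploits: list[str] = field(default_factory=list)
    payloads: list[str] = field(default_factory=list)
    sophistication: str = ""  # free-text assessment, empty until evaluated

    def missing_components(self) -> list[str]:
        """List the components that have not been documented yet."""
        components = {
            "propagation_methods": self.propagation_methods,
            "exploits": self.exploits,
            "payloads": self.payloads,
            "sophistication": self.sophistication,
        }
        return [label for label, value in components.items() if not value]


profile = MalwareProfile("sample-a", propagation_methods=["email attachment"])
print(profile.missing_components())
```

Tracking which components are still undocumented helps make sure an analysis covers all four aspects before drawing conclusions about the author.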

Propagation methods are the means of transporting malicious code from its origin to the target. They depend on scale and specificity. The target may consist of machines connected to the internet (large scale), which could mean, for example, that someone is trying to create a botnet. Or the target could be a small area network (small scale), for example when a specific company is being attacked for some reason.

Specificity refers to the constraints placed on the malicious code. Constraints based on technical limitations could be a particular operating system or software version. Constraints based on personal information could be account credentials, details about co-workers or the presence of certain filenames on the victim's machine.

The scale of propagation is directly proportional to the probability of detection and to the limitation imposed by a defensive response.

Exploits enable the propagation method and the operation of the payload.

The severity of an exploit is indicated by the CVSS score [02] assigned to the vulnerability it targets.
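To make the score easier to read, a numeric CVSS value is usually translated into a qualitative rating. The sketch below assumes the CVSS v3.x rating scale (None, Low, Medium, High, Critical). The text does not say which CVSS version is meant, and the function name is illustrative.

```python
def cvss_v3_severity(score: float) -> str:
    """Map a CVSS v3.x base score (0.0 to 10.0) to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS base scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"       # 0.1 - 3.9
    if score < 7.0:
        return "Medium"    # 4.0 - 6.9
    if score < 9.0:
        return "High"      # 7.0 - 8.9
    return "Critical"      # 9.0 - 10.0


for score in (0.0, 3.9, 5.5, 7.0, 9.8):
    print(score, cvss_v3_severity(score))
```

An exploit targeting a vulnerability rated High or Critical typically deserves more attention during analysis than one rated Low.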

The payload is code written to manipulate system resources and create some effect on a computer system.

Today we can see an increase in the level of payload customization. There are payloads for a web server, for a desktop computer, for a domain controller, for a smartphone, and so on. Every payload is tailored to a specific target in order to be very small and to guarantee the maximum likelihood of success.

The level of sophistication of malicious code can tell us useful information. MAlicious Software Sophistication analysis is an approach that can be used to figure out who is behind it: individuals, groups, organizations or states.

In this scenario we have, on one side, generic malware created by individuals or small groups who generally make use of third-party exploit kits such as the Blackhole Exploit Kit [05]. On the other side we have organizations or states with greater resources who can develop innovative attack methods and new exploits, as in Duqu 2.0 [06], described as the most sophisticated malware ever seen.

The balance of power between attacker and defender is strongly asymmetric. The defender needs huge quantities of resources to defend himself, also because he has to operate proactively to fight this kind of threat.

The study of malicious code is important for understanding how attackers act, in order to detect attacks in progress and to prepare a better defensive response.

REFERENCES

[01] Trey Herr, Eric Armbrust, Milware: Identification and Implications of State Authored Malicious Software, The George Washington University, 2015.

[02] https://www.first.org/: CVSS: Common Vulnerability Scoring System.

[03] Marc Goodman, Future Crimes: Inside the Digital Underground and the Battle for Our Connected World, Anchor Books, 2015.

[04] https://www.leakedsource.com/: leaked databases that contain information of large public interest.

[05] https://en.wikipedia.org/wiki/Blackhole_exploit_kit: The Blackhole exploit kit is as of 2012 the most prevalent web threat.

[06] https://en.wikipedia.org/wiki/Duqu_2.0: Kaspersky discovered the malware, and Symantec confirmed those findings.