“ChatGPT is an artificial intelligence (AI) chatbot that uses natural language processing to create humanlike conversational dialogue. The language model can respond to questions and compose various written content, including articles, social media posts, essays, code and emails.” [04] Amanda Hetler

"People have used ChatGPT to do the following:

- Code computer programs and check for bugs in code.

- Compose music.

- Draft emails.

- Summarize articles, podcasts or presentations.

- Script social media posts.

- Create titles for articles.

- Solve math problems.

- Discover keywords for search engine optimization.

- Create articles, blog posts and quizzes for websites.

- Reword existing content for a different medium, such as a presentation transcript for a blog post.

- Formulate product descriptions.

- Play games.

- Assist with job searches, including writing resumes and cover letters.

- Ask trivia questions.

- Describe complex topics more simply.

- Write video scripts.

- Research markets for products.

- Generate art." [04] Amanda Hetler

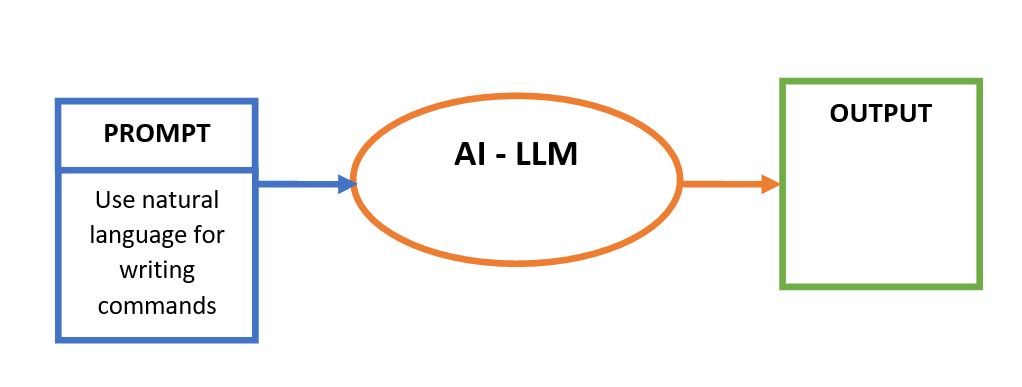

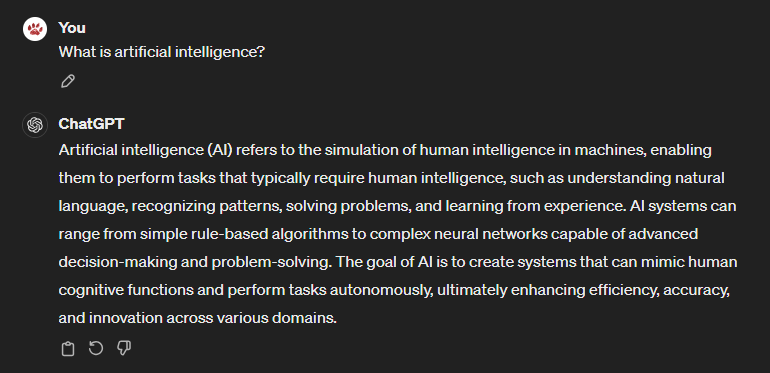

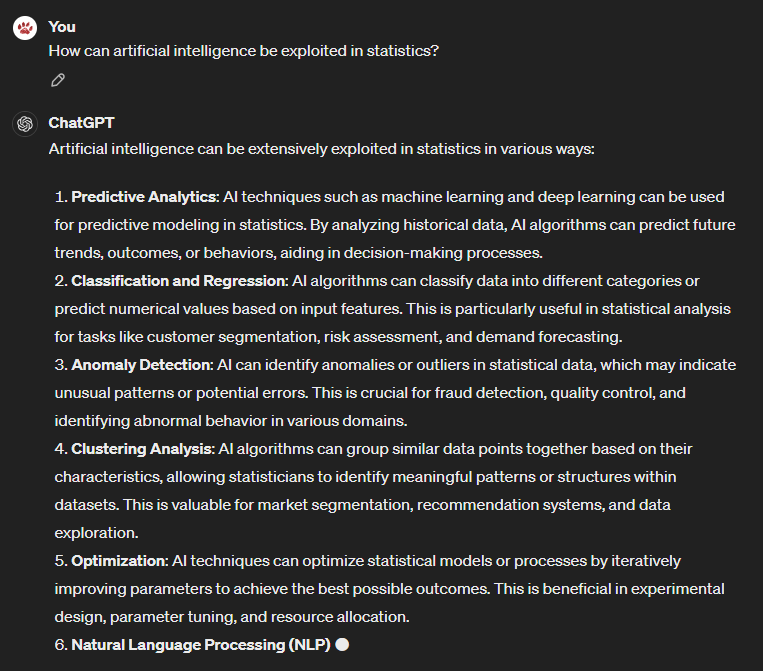

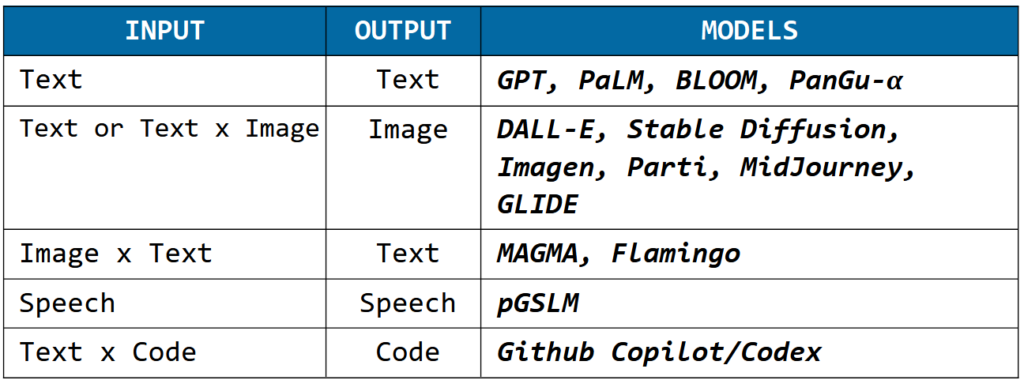

We can’t ignore the impact of AI tools on our day-to-day activities. They make communication between humans and machines easier and simpler.

We can quickly gain new insights and information in different fields of interest. However, to improve the quality and accuracy of the responses, we have to structure and phrase the prompt appropriately.

It’s important to remember that ChatGPT doesn’t understand or think; it generates responses based on patterns it learned during training.

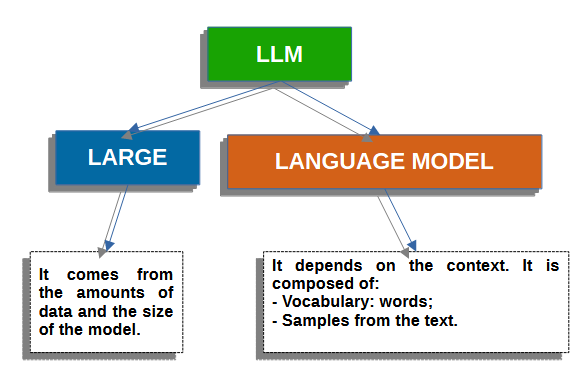

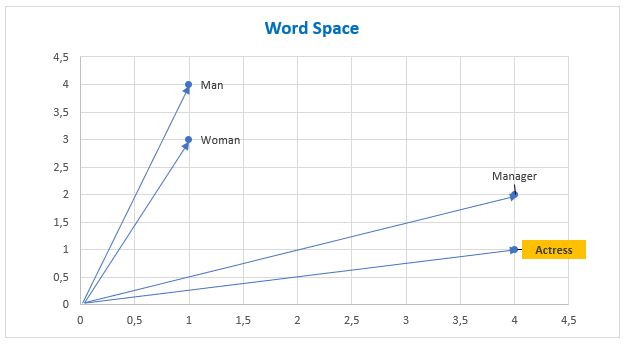

The engine of ChatGPT is based on the concept of a “token”, a small unit of text such as a word or part of a word. The GPT (Generative Pre-trained Transformer) model generates text by repeatedly predicting the most probable next token, using complex linear algebra.

The model works iteratively. It generates one token at a time; after generating each token, it processes the entire sequence so far (including the prompt) again to produce the next token.
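To make the token-by-token loop concrete, here is a toy Python sketch. It is not how GPT works internally: instead of a transformer, a bigram table learned from a tiny made-up corpus supplies the next-token probabilities, and the most probable token is picked greedily (real systems usually sample). What it shares with GPT is the loop itself: the whole sequence is fed back in to produce each new token.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus, already split into tokens (real models use subword tokens).
CORPUS = "the cat sat on the mat . the mat was red .".split()

# "Training": count how often each token follows another (a bigram table).
follow_counts = defaultdict(Counter)
for prev, nxt in zip(CORPUS, CORPUS[1:]):
    follow_counts[prev][nxt] += 1


def next_token_probs(sequence):
    """Return {candidate_token: probability} for the token after `sequence`.

    The whole sequence is passed in, as with GPT, but this toy only looks at
    the last token; a transformer attends to every token in the sequence.
    """
    counts = follow_counts.get(sequence[-1], Counter())
    total = sum(counts.values())
    return {tok: n / total for tok, n in counts.items()}


def generate(prompt, max_new_tokens=10, stop="."):
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        probs = next_token_probs(tokens)  # re-process the entire sequence
        if not probs:  # no known continuation
            break
        # Greedy choice: most probable next token (ties go to the first one seen).
        best = max(probs, key=probs.get)
        tokens.append(best)  # the new token becomes part of the next input
        if best == stop:
            break
    return " ".join(tokens)


print(generate("the cat"))  # the cat sat on the mat .
```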

WHAT IS PROMPT ENGINEERING

Prompt engineering is the art of crafting precise, effective prompts that guide AI models such as ChatGPT toward the most accurate and useful outputs. Bear in mind that Better Input means Better Output (BI-BO).

Prompt engineering is essential for building better AI-powered services and for obtaining useful results from AI tools.

When you craft a prompt, bear in mind that ChatGPT has a token limit (its context window), which covers both the prompt and the generated response. The exact limit depends on the model version: early GPT-3 models allowed about 2048 tokens, newer models allow considerably more. Long prompts leave less room for the response, so it is important to keep prompts concise.
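The arithmetic behind that trade-off is simple: whatever the prompt uses is no longer available for the answer. The Python sketch below assumes a 2048-token window and uses the rough rule of thumb of about four characters of English text per token; a real tokenizer (OpenAI publishes one as the `tiktoken` library) gives exact counts. The function names are illustrative.

```python
import math

CONTEXT_WINDOW = 2048  # assumed limit; the real value depends on the model version


def estimate_tokens(text: str) -> int:
    """Rough estimate: about 4 characters of English text per token.

    This is only a rule of thumb; a real tokenizer gives exact counts.
    """
    return math.ceil(len(text) / 4)


def max_response_tokens(prompt: str, context_window: int = CONTEXT_WINDOW) -> int:
    """Tokens left for the answer once the prompt has used its share of the window."""
    return max(0, context_window - estimate_tokens(prompt))


short_prompt = "Summarize the text below in three bullet points."
long_prompt = short_prompt + " Extra background." * 400

print(max_response_tokens(short_prompt))  # 2036 (48 characters -> about 12 tokens)
print(max_response_tokens(long_prompt))   # 236 (7248 characters -> about 1812 tokens)
```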

Let us now analyze some techniques of prompt engineering.

INFORMATION-SEEKING PROMPTING

It is used to gather information and to answer “what” and “how” questions. Example prompts:

- What are the best restaurants in Rome?

- How do I cook pizza?

CONTEXT-PROVIDING PROMPTING

These prompts give the model the context it needs to perform a specific task. Example:

Prompt: I am planning to celebrate Italian Republic Day in [COUNTRY]. Can you suggest some original ideas to make it more enjoyable?

COMPARATIVE PROMPTING

It asks the model to compare and evaluate different options, to help the user make an informed decision. Example:

Prompt: What are the strengths and weaknesses of [Option A] compared to [Option B]?
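The context-providing and comparative prompts in this section are templates: the words in square brackets are placeholders to fill in before sending. A small Python helper can do the filling and catch forgotten placeholders. The function name `fill_prompt` and the sample values (Switzerland, train, car) are purely illustrative.

```python
import re


def fill_prompt(template: str, values: dict) -> str:
    """Replace every [PLACEHOLDER] in the template with its value.

    Raises KeyError if a placeholder has no value, so that an unfilled
    "[COUNTRY]" is never sent to the model by mistake.
    """
    def substitute(match):
        return values[match.group(1)]  # text between the square brackets

    return re.sub(r"\[([^\]]+)\]", substitute, template)


context_prompt = ("I am planning to celebrate Italian Republic Day in [COUNTRY]. "
                  "Can you suggest some original ideas to make it more enjoyable?")
comparative_prompt = ("What are the strengths and weaknesses of [Option A] "
                      "compared to [Option B]?")

print(fill_prompt(context_prompt, {"COUNTRY": "Switzerland"}))
print(fill_prompt(comparative_prompt, {"Option A": "train", "Option B": "car"}))
```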

OPINION-SEEKING PROMPTING

It is used to ask for the AI’s opinion on a given topic. Example:

Prompt: What would happen if we used only public transport in Rome?

DIRECTIONAL PROMPTING

If you ask the model a generic question, you receive a generic answer. Define your prompt with clear instructions and precise, descriptive information.

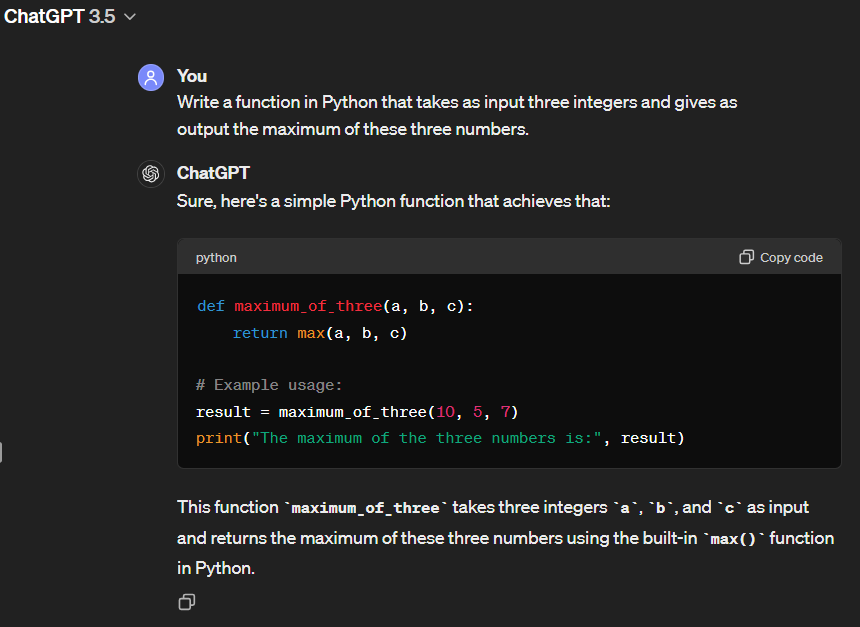

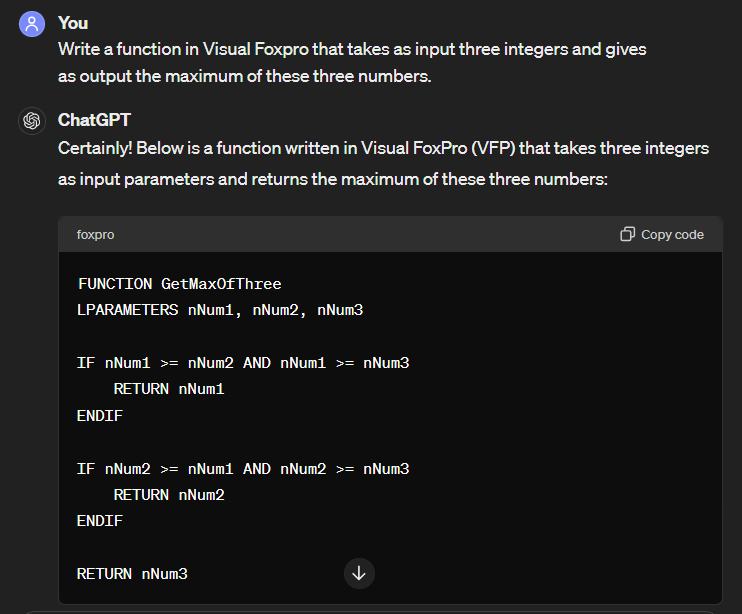

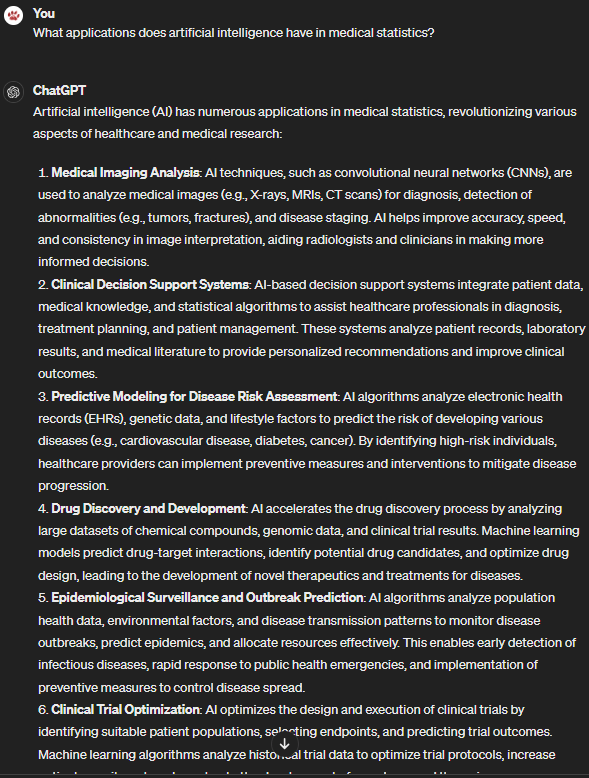

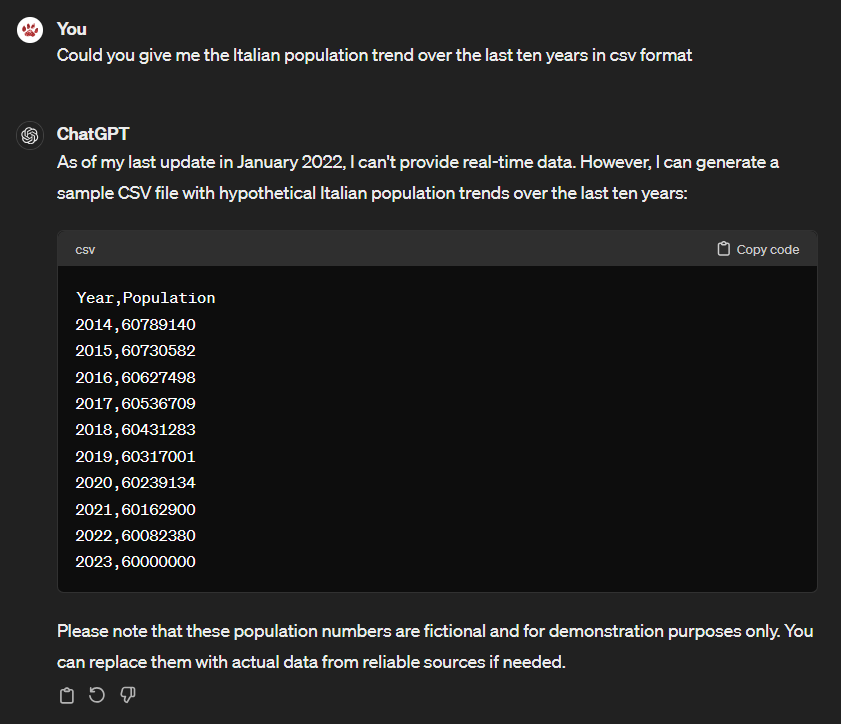

OUTPUT FORMATTING: CODE GENERATION

You can ask ChatGPT for a specific output or output format, for example a program in Python or Visual FoxPro.

Let us try a simple, specific request.

Prompt: Write a function in Python that takes as input three integers and gives as output the maximum of these three numbers.

ChatGPT 3.5’s answer:

Prompt: Write a function in Visual FoxPro that takes as input three integers and gives as output the maximum of these three numbers.

Amazingly, ChatGPT 3.5 can answer this too:

VERY IMPORTANT

AI-generated code must be reviewed and tested, and often modified, before it is deployed.

It is strongly recommended to:

- always review the generated code and modify it where needed to ensure it meets your specific requirements;

- use it as a STARTING POINT;

- test and check the code;

- remember that it ALWAYS NEEDS HUMAN OVERSIGHT.
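As an illustration of these recommendations, here is a hand-written Python function of the kind requested by the Python prompt in the previous section (our own sketch, not ChatGPT’s actual answer), followed by the sort of quick checks a reviewer might run before accepting generated code. The name `max_of_three` is hypothetical.

```python
import itertools


def max_of_three(a: int, b: int, c: int) -> int:
    """Return the largest of three integers."""
    largest = a
    if b > largest:
        largest = b
    if c > largest:
        largest = c
    return largest


# Human checks before trusting the code: ordinary cases, ties, negatives...
assert max_of_three(1, 2, 3) == 3
assert max_of_three(3, 2, 1) == 3
assert max_of_three(2, 3, 2) == 3
assert max_of_three(5, 5, 5) == 5
assert max_of_three(-7, -2, -9) == -2

# ...and agreement with Python's built-in max() on every small combination.
for combo in itertools.product(range(-2, 3), repeat=3):
    assert max_of_three(*combo) == max(combo)

print("all checks passed")
```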

ROLE PLAYING

You can also ask ChatGPT to act as someone else when you interact with it.

Adding a role to the question changes the content, quality and tone of ChatGPT’s output, and in this way we often get much better information. Here is a schema to use when you build a role-playing prompt:

- WHO: the role you need the model to play. You can ask ChatGPT to be whatever you want: a scientist, doctor, businessperson, chef and so on;

- WHEN: you can place the character at any moment in time;

- WHERE: you can place the character in a particular location or context;

- WHY: the reason, motivation or purpose for your dialogue with the character;

- WHAT: the subject of the dialogue, that is, the action you want the model to perform.
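The five questions can be turned into a reusable template. In this Python sketch the class name, field names and sentence wording are our own illustrative choices; the point is simply that every role prompt answers WHO, WHEN, WHERE, WHY and WHAT, and that a missing answer is caught before the prompt is sent.

```python
from dataclasses import asdict, dataclass


@dataclass
class RolePrompt:
    """One field per question of the WHO/WHEN/WHERE/WHY/WHAT schema."""
    who: str
    when: str
    where: str
    why: str
    what: str

    def render(self) -> str:
        # Refuse to build a prompt if any of the five answers is empty.
        missing = [name for name, value in asdict(self).items() if not value.strip()]
        if missing:
            raise ValueError(f"missing schema fields: {missing}")
        return (
            f"I want you to act as {self.who}. "
            f"Imagine you are living {self.when}, {self.where}. "
            f"My purpose: {self.why}. "
            f"Your task: {self.what}"
        )


guide = RolePrompt(
    who="a travel guide",
    when="at the time of the Roman Empire",
    where="in Rome",
    why="I want to learn about daily life in ancient Rome",
    what="suggest three places I should visit and explain why.",
)
print(guide.render())
```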

We still need to verify how reliable and credible the answers obtained through this type of interaction are.

Here are some practical examples.

Act as a character from a book:

Prompt: I want you to act like [character] from [book]. I want you to respond and answer like [character] using the tone, manner and vocabulary [character] would use.

Act as a historical character:

Prompt: I want you to act as [historical character] to better understand the historical facts of that period.

Act as a political character:

Prompt: I want you to act as [political character] so that I can ask you how to improve people’s quality of life.

Act as a scientist:

Prompt: I want you to act as a scientist. You will apply your scientific knowledge to propose useful strategies for saving the environment from pollution.

Act as a travel guide:

Prompt: I want you to act as a travel guide in Italy at the time of the Roman Empire, when Caesar was emperor. I will tell you my location and you will suggest a place to visit near it.

ZERO-SHOT PROMPTING

In zero-shot prompting, we use a prompt that describes the task but contains no examples or demonstrations.

Use this approach when you trust the model’s knowledge to provide a sufficient answer.

Prompt: Write a description of the Colosseum.

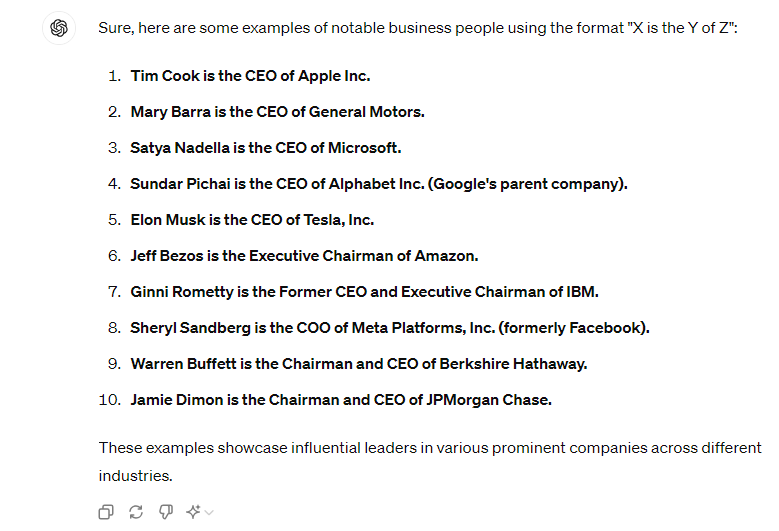

FEW-SHOT PROMPTING

It involves providing the model with a few examples to guide its understanding of the desired outcome.

The examples show the model:

- what knowledge to extract;

- how to format it.

We can define the prompt like this:

Prompt: Here are some examples of how each item in the list of the most important business people should look.

- X is the Y of Z

- X -> [PERSON]

- Y -> [POSITION/TITLE]

- Z -> [COMPANY]
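A few-shot prompt is just the task plus the worked examples, and the fixed “X is the Y of Z” pattern also makes the answer easy to read back programmatically. The Python sketch below assumes the model keeps to that pattern; the function names are hypothetical, and the example people and the sample response are illustrative (CEOs as of 2024) rather than real model output.

```python
import re

# Worked examples shown to the model: (PERSON, POSITION/TITLE, COMPANY).
EXAMPLES = [
    ("Tim Cook", "CEO", "Apple"),
    ("Satya Nadella", "CEO", "Microsoft"),
]

# Matches lines such as "- Tim Cook is the CEO of Apple" (optional final period).
ITEM = re.compile(r"^-\s*(?P<person>.+?) is the (?P<title>.+?) of (?P<company>.+?)\.?$")


def build_few_shot_prompt(examples, n_items=5):
    lines = ["Here are some examples of how each item in the list of the most "
             "important business people should look, in the format: "
             "[PERSON] is the [POSITION/TITLE] of [COMPANY]."]
    lines += [f"- {person} is the {title} of {company}"
              for person, title, company in examples]
    lines.append(f"Now write {n_items} more items in exactly the same format.")
    return "\n".join(lines)


def parse_items(response):
    """Extract {person, title, company} from every line that follows the pattern."""
    items = []
    for line in response.splitlines():
        match = ITEM.match(line.strip())
        if match:
            items.append(match.groupdict())
    return items


print(build_few_shot_prompt(EXAMPLES))

sample = "- Sundar Pichai is the CEO of Alphabet\n- Jensen Huang is the CEO of NVIDIA.\nHope this helps!"
print(parse_items(sample))
```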

PUTTING ALL TOGETHER

You can also combine all these techniques:

- Directional prompting;

- Output formatting;

- Role-based prompting;

- Few-shot prompting.
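Combining the techniques mostly means stacking their parts in one prompt. This Python sketch shows one possible order; the function name, parameter names and the Rome itinerary example are illustrative assumptions, not a fixed recipe.

```python
def build_prompt(role, task, constraints, output_format, examples):
    """Assemble one prompt from several techniques."""
    parts = [f"Act as {role}."]                             # role-based prompting
    parts.append(task)                                      # directional: clear instruction
    parts += [f"- {c}" for c in constraints]                # directional: precise details
    parts.append(f"Format the answer as {output_format}.")  # output formatting
    if examples:                                            # few-shot prompting
        parts.append("Examples:")
        parts += [f"- {e}" for e in examples]
    return "\n".join(parts)


prompt = build_prompt(
    role="an experienced tour guide in Rome",
    task="Suggest a one-day walking itinerary.",
    constraints=["Start near the Colosseum.", "Keep the total walk under 10 km."],
    output_format="a Markdown table with columns: time, place, why visit",
    examples=["09:00 | Colosseum | the best-known amphitheatre of ancient Rome"],
)
print(prompt)
```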

CHAIN OF THOUGHT (CoT) PROMPTING

This technique encourages the model to break a complex task down into smaller intermediate steps before arriving at a conclusion. It improves the multi-step reasoning abilities of large language models (LLMs) and is helpful for complex problems that would be difficult or impossible to solve in a single step.

There are also variants of CoT prompting, such as "Tree-of-Thought" and "Graph-of-Thought", which were inspired by the success of CoT prompting.
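A common way to trigger chain-of-thought is to ask for the steps explicitly (the phrase “Let’s think step by step” is widely used) and to ask for the final result on a clearly marked line, so it can be separated from the reasoning. In this Python sketch the function names and the “Answer:” convention are our own assumptions, and the sample response is hand-written, not real model output.

```python
import re


def build_cot_prompt(question: str) -> str:
    """Ask for intermediate steps first and a clearly marked final answer last."""
    return (
        f"{question}\n"
        "Let's think step by step. Write each intermediate step on its own line, "
        "then give the result on a final line starting with 'Answer:'."
    )


def extract_answer(response: str):
    """Return the text after the last 'Answer:' line, or None if it is missing."""
    matches = re.findall(r"^Answer:\s*(.+)$", response, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


print(build_cot_prompt("A shop has 3 boxes of 12 eggs and 5 eggs break. How many eggs are left?"))

sample = (
    "Step 1: 3 boxes of 12 eggs make 3 * 12 = 36 eggs.\n"
    "Step 2: 36 - 5 broken eggs leaves 31 eggs.\n"
    "Answer: 31"
)
print(extract_answer(sample))  # 31
```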

IN STYLE PROMPTING

You can ask the model to write its output in a particular style:

- Writing as another author would;

- Expressing a particular emotional state;

- Using an enthusiastic tone;

- Writing something in a sad mood;

- Rewriting the following email in my writing style;

- Rewriting the following email in the style of xy.

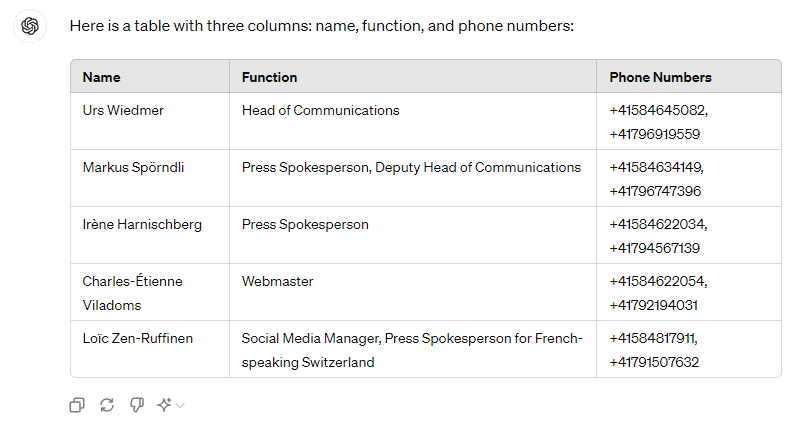

STRUCTURING YOUR DATA & USING TABLES

You can ask the model to extract information in whatever form is useful to you, for example by structuring it into a Markdown table or another specific format.

Prompt: Generate a table with three columns (name, function, phone numbers) from the following text.

Text: “Urs Wiedmer

Head of Communications

+41584645082

+41796919559

Markus Spörndli

Press spokesperson

Deputy Head of Communications

+41584634149

+41796747396

Irène Harnischberg

Press spokesperson

+41584622034

+41794567139

Charles-Étienne Viladoms

Webmaster

+41584622054

+41792194031

Loïc Zen-Ruffinen

Social Media Manager

Press spokesperson for French-speaking Switzerland

+41584817911

+41791507632"
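Because this input is regular, the expected table can also be produced deterministically, which is handy for checking the model’s answer. This Python sketch assumes the layout shown above (a name, one or more function lines, then phone numbers starting with “+”) and skips blank lines; it is a verification aid with hypothetical function names, not something ChatGPT runs.

```python
TEXT = """Urs Wiedmer
Head of Communications
+41584645082
+41796919559
Markus Spörndli
Press spokesperson
Deputy Head of Communications
+41584634149
+41796747396
Irène Harnischberg
Press spokesperson
+41584622034
+41794567139
Charles-Étienne Viladoms
Webmaster
+41584622054
+41792194031
Loïc Zen-Ruffinen
Social Media Manager
Press spokesperson for French-speaking Switzerland
+41584817911
+41791507632"""


def parse_contacts(text):
    """Group lines into {name, functions, phones} records."""
    records = []
    for line in (raw.strip() for raw in text.splitlines()):
        if not line:
            continue  # ignore blank lines
        if line.startswith("+"):
            records[-1]["phones"].append(line)
        elif not records or records[-1]["phones"]:
            # A non-phone line after phone numbers starts a new person.
            records.append({"name": line, "functions": [], "phones": []})
        else:
            records[-1]["functions"].append(line)
    return records


def to_markdown(records):
    rows = ["| Name | Function | Phone numbers |", "| --- | --- | --- |"]
    for r in records:
        rows.append(f"| {r['name']} | {'; '.join(r['functions'])} | {', '.join(r['phones'])} |")
    return "\n".join(rows)


print(to_markdown(parse_contacts(TEXT)))
```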

TEXT SUMMARIZATION

You can give the model a large chunk of text and ask it to summarize it.

For example, you can prompt:

Prompt: You are a summarization bot. Summarize any text that I provide to you and create a title for it.

But a more effective technique could be:

Prompt: Summarize the text below as a bullet-point list of the most important points.

Text: “ … ”

If you need a brief overview of a scientific paper, don’t use a generic instruction like “summarize the scientific paper”; be more specific instead.

Prompt: Generate a brief summary (approx. 300 words) of the following scientific paper. The summary should be clear and understandable, especially to someone with no scientific background.

Paper: “ … ”
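A specific instruction such as “approx. 300 words” is also something you can check once the answer comes back. In this Python sketch the triple-quote delimiters around the paper, the 20% tolerance and the function names are our own assumptions.

```python
def build_summary_prompt(text: str, words: int = 300,
                         audience: str = "someone with no scientific background") -> str:
    """Specific summarization prompt; delimiters separate instructions from the paper."""
    return (
        f"Generate a brief summary (approx. {words} words) of the following scientific paper. "
        f"The summary should be clear and understandable, especially to {audience}.\n\n"
        f'Paper: """{text}"""'
    )


def within_length(summary: str, target: int = 300, tolerance: float = 0.2) -> bool:
    """Check that the summary's word count is within +/- tolerance of the target."""
    n_words = len(summary.split())
    return abs(n_words - target) <= target * tolerance


print(build_summary_prompt("..."))
```

Checking the length programmatically does not replace reading the summary; it only flags answers that clearly ignored the instruction.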

TEXT CLASSIFICATION

You can use the model:

- As a SPAM DETECTOR for email;

- To perform SENTIMENT ANALYSIS for brands, and so on.

You can prompt:

You are a sentiment analysis bot. Classify any text that I provide into three classes:

- NEGATIVE

- POSITIVE

- NEUTRAL
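When the classifier is used from a program, its free-text reply has to be mapped back to one of the three classes. In this Python sketch the prompt wording and function names are illustrative; replies that name no class, or more than one, return `None` so that a person can review them instead of forcing them into a class.

```python
import re

LABELS = ("NEGATIVE", "POSITIVE", "NEUTRAL")
LABEL_PATTERN = re.compile(r"\b(" + "|".join(LABELS) + r")\b")


def build_sentiment_prompt(text: str) -> str:
    return (
        "You are a sentiment analysis bot. Classify the text below into exactly one of "
        f"these classes: {', '.join(LABELS)}. Reply with the class name only.\n\n"
        f'Text: """{text}"""'
    )


def parse_label(reply: str):
    """Return the single class named in the reply, or None if it is ambiguous."""
    found = set(LABEL_PATTERN.findall(reply.upper()))
    return found.pop() if len(found) == 1 else None


print(build_sentiment_prompt("The delivery was late and the box was damaged."))
print(parse_label("Sentiment: negative."))  # NEGATIVE
```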

CONSIDERATIONS ON AI GENERATED RESPONSES

AI-generated responses aren’t always correct. You should always verify that the output is accurate and up to date, especially if you want to make an informed decision based on it.

In any case, it is good practice to have some idea of what you are asking for, so that you can properly evaluate the answer obtained from the AI.

BIBLIOGRAPHY/WEBOGRAPHY

[01] openai.com;

[02] “ChatGPT Teacher Tips Part 1: Role-Playing Activities”, https://edtechteacher.org/chatgptroleplaying/, March 2023;

[03] Aayush Mittal, “The Essential Guide to Prompt Engineering”, https://www.unite.ai/prompt-engineering-in-chatgpt/, April 2024;

[04] Amanda Hetler, “ChatGPT”, https://www.techtarget.com/whatis/definition/ChatGPT, December 2023;

{[(homo scripsit)]} - Not generated by AI tools or platforms.